Just read of this in New Scientist, and read the intro in the TATE article:

Integrated Information Theory

Had never heard of it before.

I’m tempted to dismiss it, but what do you lot think?

The Rev Dodgson said:

what do you lot think?

as bots, we don’t, obviously, but

attempts to provide a framework capable of explaining why some physical systems (such as human brains) are conscious, why they feel the particular way they do in particular states (e.g. why our visual field appears extended when we gaze out at the night sky), and what it would take for other physical systems to be conscious

big claims, little substance

Rather than try to start from physical principles and arrive at consciousness, IIT “starts with consciousness” (accepts the existence of our own consciousness as certain) and reasons about the properties that a postulated physical substrate would need to have in order to account for it. The ability to perform this jump from phenomenology to mechanism rests on IIT’s assumption that if the formal properties of a conscious experience can be fully accounted for by an underlying physical system, then the properties of the physical system must be constrained by the properties of the experience.

so start with the conclusion and build supporting claims, we’re SCIENCE so not sure we can really abide that

SCIENCE said:

The Rev Dodgson said:

what do you lot think?

as bots, we don’t, obviously, but

attempts to provide a framework capable of explaining why some physical systems (such as human brains) are conscious, why they feel the particular way they do in particular states (e.g. why our visual field appears extended when we gaze out at the night sky), and what it would take for other physical systems to be conscious

big claims, little substance

Rather than try to start from physical principles and arrive at consciousness, IIT “starts with consciousness” (accepts the existence of our own consciousness as certain) and reasons about the properties that a postulated physical substrate would need to have in order to account for it. The ability to perform this jump from phenomenology to mechanism rests on IIT’s assumption that if the formal properties of a conscious experience can be fully accounted for by an underlying physical system, then the properties of the physical system must be constrained by the properties of the experience.

so start with the conclusion and build supporting claims, we’re SCIENCE so not sure we can really abide that

As bots, surely you have a unique perspective to apply to this question.

Are you conscious bots?

Sounds interesting if not very well baked, but it seems the theory is in its infancy.

At least they’re not claiming that “consciousness is an illusion” like too many idiots out there.

SCIENCE said:

… IIT “starts with consciousness” (accepts the existence of our own consciousness as certain) and reasons about the properties that a postulated physical substrate would need to have in order to account for it….

so start with the conclusion and build supporting claims, we’re SCIENCE so not sure we can really abide that

But since all our observations are perceived through our consciousness, surely the fact that we are conscious (even if all we perceive is illusory) is the one thing we can be sure of.

Descartes seemed to think so anyway.

SCIENCE said:

The Rev Dodgson said:

what do you lot think?

as bots, we don’t, obviously, but

attempts to provide a framework capable of explaining why some physical systems (such as human brains) are conscious, why they feel the particular way they do in particular states (e.g. why our visual field appears extended when we gaze out at the night sky), and what it would take for other physical systems to be conscious

big claims, little substance

Rather than try to start from physical principles and arrive at consciousness, IIT “starts with consciousness” (accepts the existence of our own consciousness as certain) and reasons about the properties that a postulated physical substrate would need to have in order to account for it. The ability to perform this jump from phenomenology to mechanism rests on IIT’s assumption that if the formal properties of a conscious experience can be fully accounted for by an underlying physical system, then the properties of the physical system must be constrained by the properties of the experience.

so start with the conclusion and build supporting claims, we’re SCIENCE so not sure we can really abide that

¿ every time that we remember being active, is a memory of a time that we were activated as us, does that count ?

Bubblecar said:

Sounds interesting if not very well baked, but it seems the theory is in its infancy.

At least they’re not claiming that “consciousness is an illusion” like too many idiots out there.

I agree (even if my last post might have seemed to half suggest that).

As Mike Heron sings:

The only things real

Are what we are

And what we feel.

(He precedes that with “sometimes it seems”, but we can ignore that).

The Rev Dodgson said:

SCIENCE said:

… IIT “starts with consciousness” (accepts the existence of our own consciousness as certain) and reasons about the properties that a postulated physical substrate would need to have in order to account for it….

so start with the conclusion and build supporting claims, we’re SCIENCE so not sure we can really abide that

But since all our observations are perceived through our consciousness, surely the fact that we are conscious (even if all we perceive is illusory) is the one thing we can be sure of.

Descartes seemed to think so anyway.

¿ so you mean consciousness is just the converse of the anthropic principle ?

all a bit complex for me, have a better look later

something i’ve been thinking about for a while, perhaps related to the OP

consider a working concept of whatever (object) applied (by a mind), and groups of concepts; call this the orientation toward the object/s. It has a mix of forces about it or them (the objects), and the concept and the force are not the same thing. Then, on different occasions (times), when resolving the orientation toward anything (even marginally) similar, the resolving happens at different rates, and consequently potentially in slightly different ways

now that’s probably crude as i’ve put it above, but I can’t be bothered tidying it up right now

my guess is that consciousness is in, or rather is produced as a consequence of, the re-resolving: a look-see over the re-resolving, so it’s in (or comes from) the varied rates of the re-resolve

in a feel or a sense, a feel-see for the resolving, only possible because it’s not the same on each occasion

consider it a bit like this: you travel to work on the same familiar route each day, but then for whatever reason one day you take another route, and the familiar route then seems different in some way because you have taken another route

or on the familiar route one day you have transmission troubles and are stuck in first gear, so your maximum speed is half the posted speed limit. You usually travel near the posted limit, as everyone else does, but now a different experience is had: being speed-restrained by the transmission troubles gives you a different view of your more typical experience

SCIENCE said:

The Rev Dodgson said:

SCIENCE said:

… IIT “starts with consciousness” (accepts the existence of our own consciousness as certain) and reasons about the properties that a postulated physical substrate would need to have in order to account for it….

so start with the conclusion and build supporting claims, we’re SCIENCE so not sure we can really abide that

But since all our observations are perceived through our consciousness, surely the fact that we are conscious (even if all we perceive is illusory) is the one thing we can be sure of.

Descartes seemed to think so anyway.

¿ so you mean consciousness is just the converse of the anthropic principle ?

I don’t think that’s what I meant, but I’ll have a think about it.

(possibly you are referring to a different anthropic principle to the one I like, and think is obviously and undeniably true)

((But off to work now))

So this is a hypothesis about “consciousness”.

You could read what I’ve already written about consciousness, but that’s avoiding the issue because what I call “consciousness” is not what IIT calls “consciousness”.

What they define as “consciousness” is given by the five axioms.

You may note that the axioms are completely without meaning.

The axioms are used to create a pure mathematics.
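For a feel of what a number attached to an axiom like “integration” can look like, here is a much simpler quantity than IIT’s Φ: total correlation (also called multi-information), the sum of the entropies of a system’s parts minus the entropy of the whole. It is zero when the parts are independent and grows as the whole carries structure the parts don’t have separately. This is a swapped-in toy measure, not IIT’s actual calculation, which works with cause-effect structure and searches over partitions of the system; the function names and example distributions are hypothetical, made up for illustration.

```python
import math
from collections import defaultdict
from itertools import product


def entropy(probs):
    """Shannon entropy, in bits, of an iterable of probabilities."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def marginal(joint, i):
    """Marginal distribution of unit i from a joint dict {state_tuple: prob}."""
    m = defaultdict(float)
    for state, p in joint.items():
        m[state[i]] += p
    return m


def total_correlation(joint):
    """Sum of the units' entropies minus the entropy of the whole system.

    Toy stand-in for 'integration' (NOT IIT's Phi): zero when the units are
    independent, larger when the whole has structure the parts lack.
    """
    n = len(next(iter(joint)))
    parts = sum(entropy(marginal(joint, i).values()) for i in range(n))
    whole = entropy(joint.values())
    return parts - whole


# Two independent fair coins: no integration at all.
independent = {s: 0.25 for s in product((0, 1), repeat=2)}
# Two binary units that always agree: the most two bits can share.
coupled = {(0, 0): 0.5, (1, 1): 0.5}

print(total_correlation(independent))  # 0.0
print(total_correlation(coupled))      # 1.0
```

Note that the measure says nothing about whether anything is experienced; it only quantifies how much the whole differs from its parts taken separately.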

mollwollfumble said:

What they define as “consciousness” is given by the five axioms.

- Intrinsic existence: Consciousness exists.

- Composition: Consciousness is structured.

- Information: Consciousness is specific.

- Integration: Consciousness is unified.

- Exclusion: Consciousness is definite.

You may note that the axioms are completely without meaning.

The axioms are used to create a pure mathematics.

:)

It does seem that often the aim of philosophy is to make an explanation so incomprehensible that it cannot be refuted.

I had given some thought earlier to what it would take to make a conscious robot. But can’t remember what I said. So I’ll start afresh.

In addition to the normal: input, output, calculator and memory.

It seems to me that the absolutely essential extra component is a “reward centre”.

The simplest useful reward centre would be one that gives a positive reward for greater brain activity and negative reward for repetition.

The reward centre would therefore reward active socialization and active innovation.

Two other possibly useful extra components would be a random number generator (acting as a starter motor for innovation) and a forgettery (to allow repetition in the long term).
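The reward centre idea can be sketched in a few lines. This is a minimal sketch under loud assumptions: `RewardCentre`, `choose_action`, the `activity` score and the parameter values are all hypothetical. “Brain activity” is reduced to a number the caller supplies, repetition is tracked as a decaying count per action (the forgettery), and an occasional random pick plays the starter-motor role. It does not model socialisation at all.

```python
import random
from collections import Counter


class RewardCentre:
    """Hypothetical reward centre: rewards activity, penalises repetition,
    and slowly forgets past actions so they can eventually be repeated."""

    def __init__(self, repetition_penalty=1.0, forget_rate=0.1):
        self.repetition_penalty = repetition_penalty
        self.forget_rate = forget_rate  # fraction of each memory trace lost per step
        self.memory = Counter()         # action -> decaying count of recent use

    def reward(self, action, activity):
        """Positive reward for activity, negative for repeating a remembered action."""
        r = activity - self.repetition_penalty * self.memory[action]
        self.memory[action] += 1
        return r

    def forget(self):
        """The 'forgettery': shrink every memory trace a little each step."""
        for action in self.memory:
            self.memory[action] *= 1 - self.forget_rate


def choose_action(centre, actions, rng, explore=0.2):
    """Usually pick the least-repeated action; sometimes pick at random."""
    if rng.random() < explore:
        return rng.choice(actions)  # random 'starter motor' for innovation
    return min(actions, key=lambda a: centre.memory[a])


# Short demo: eight steps with a fixed seed so runs are repeatable.
rng = random.Random(0)
centre = RewardCentre()
actions = ["explore", "chat", "build", "rest"]
for step in range(8):
    action = choose_action(centre, actions, rng)
    r = centre.reward(action, activity=1.0)
    centre.forget()
    print(step, action, round(r, 2))
```

With forgetting switched off (`forget_rate=0`) every action eventually becomes permanently unrewarding; the decay is what lets an old action pay off again later.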

What I would be looking for from the robot is the development of:

mollwollfumble said:

I had given some thought earlier to what it would take to make a conscious robot. But can’t remember what I said. So I’ll start afresh.

In addition to the normal: input, output, calculator and memory.

It seems to me that the absolutely essential extra component is a “reward centre”.

The simplest useful reward centre would be one that gives a positive reward for greater brain activity and negative reward for repetition.

The reward centre would therefore reward active socialization and active innovation.

Two other possibly useful extra components would be a random number generator (acting as a starter motor for innovation) and a forgettery (to allow repetition in the long term).

What I would be looking for from the robot is the development of:

- activity

- innovation

- socialisation

- deliberate humour

- inventing a name for individual external entities (ie. inventing a language)

- a yearning for freedom

- a sense of self preservation

Some interesting food for thought there.

A bit human-centric perhaps, but perhaps it needs to be.

A question for consideration.

To what extent are trees conscious, and are they on the human consciousness scale, or is it a fundamentally different type of consciousness?

The Rev Dodgson said:

Some interesting food for thought there.

A bit human-centric perhaps, but perhaps it needs to be.

A question for consideration.

To what extent are trees conscious, and are they on the human consciousness scale, or is it a fundamentally different type of consciousness?

Yes, that is a very interesting question.

It’s a question on which I’ve changed my mind four times in my lifetime. Right now I think that the question is an open one.

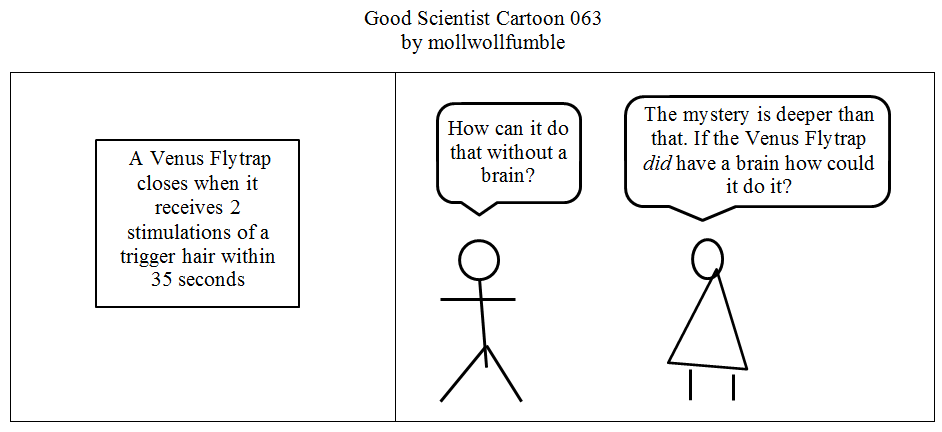

I’ve become convinced that a brain is not necessary for consciousness. But are nerves necessary? I’m not sure.

The input sensors for plants are smell and touch, heat and light. The outputs are smell and growth and, on rare occasions, rapid movement. We know that plants communicate by smell with other plants of the same species. We know that they communicate with insects and pollinators.

Going beyond the plant world, I once saw on TV a single-celled organism exhibit a recognisable emotion, fear: it suddenly stopped its searching and shrank into itself, then slowly emerged as the (unseen) threat passed. And if single-celled eukaryotes have emotions, then plants may also.

But beyond that, from emotions to consciousness, I simply don’t know, and don’t know of a way to test it.