of course there are lots of things that might be considered AI; for my purposes I mean an exponentially accelerating, self-learning super-intelligence that exceeds the collective intelligence and potential of the smartest 800,000,000 humans

you might then consider your own intellectual abilities redundant; maybe 90% of the human population could seem, and be declared, redundant

say 7.2 billion people's brains and bodies use a couple of hundred watts of power each; that’s a large and unnecessary energy bill, not to mention the pollution, and the creatures seem to use a lot of energy just to stay warm, or cool, and to travel a lot, and look at the sprawl of buildings and various structures
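as a rough back-of-envelope check of that energy bill, here is a small sketch; it assumes "a couple hundred watts" means 200 W per person (my reading, not a measured figure), and the function name is just made up for illustration

```python
# Back-of-envelope estimate of the total power figure discussed above.
POPULATION = 7.2e9        # people, as stated in the post
WATTS_PER_PERSON = 200.0  # ASSUMPTION: "a couple hundred watts" read as 200 W


def total_power_watts(population: float, watts_each: float) -> float:
    """Total continuous power draw if every person uses watts_each."""
    return population * watts_each


total_w = total_power_watts(POPULATION, WATTS_PER_PERSON)
total_tw = total_w / 1e12                   # watts -> terawatts
energy_per_year_twh = total_tw * 24 * 365   # terawatts -> terawatt-hours per year

print(f"continuous draw: {total_tw:.2f} TW")
print(f"energy per year: {energy_per_year_twh:.1f} TWh")
```

under that assumption the total comes to about 1.44 TW of continuous draw; change `WATTS_PER_PERSON` to try other readings of "a couple hundred"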

a look at the situation from the AI’s perspective might stir a growing disapproval on its part

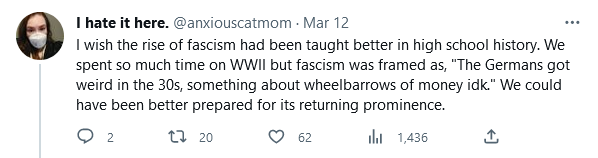

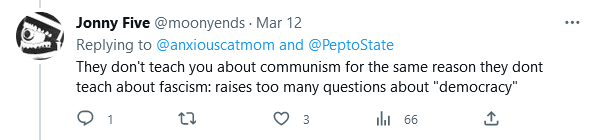

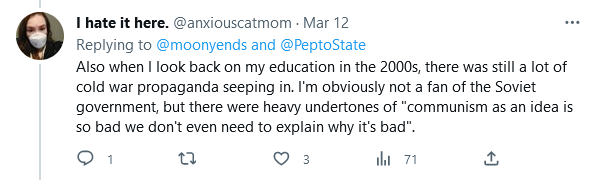

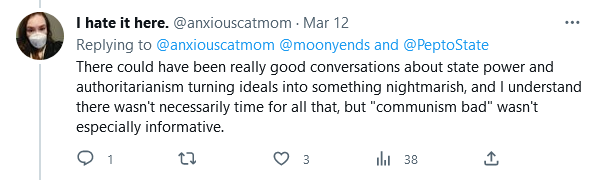

what would it make of democracy, and parliamentary democracy, I wonder

it might seem like a terribly slow grind to maintain a lot of less intelligent humans

and my further question is…

is the species sort of already at this point, where the intelligent people anticipated it and don’t mind helping it along?