How Easy Is It to Fool A.I.-Detection Tools?

By Stuart A. Thompson and Tiffany Hsu

June 28, 2023

The pope did not wear Balenciaga. And filmmakers did not fake the moon landing. In recent months, however, startlingly lifelike images of these scenes created by artificial intelligence have spread virally online, threatening society’s ability to separate fact from fiction.

To sort through the confusion, a fast-burgeoning crop of companies now offer services to detect what is real and what isn’t.

Their tools analyze content using sophisticated algorithms, picking up on subtle signals to distinguish the images made with computers from the ones produced by human photographers and artists. But some tech leaders and misinformation experts have expressed concern that advances in A.I. will always stay a step ahead of the tools.

To assess the effectiveness of current A.I.-detection technology, The New York Times tested five new services using more than 100 synthetic images and real photos. The results show that the services are advancing rapidly, but at times fall short.

Consider this example:

GENERATED BY A.I.

This image appears to show the billionaire entrepreneur Elon Musk embracing a lifelike robot. The image was created using Midjourney, the A.I. image generator, by Guerrero Art, an artist who works with A.I. technology.

Despite the implausibility of the image, it managed to fool several A.I.-image detectors.

Test results from the image of Mr. Musk: 2 out of 5 fooled.

The detectors, including versions that charge for access, such as Sensity, and free ones, such as Umm-maybe’s A.I. Art Detector, are designed to detect difficult-to-spot markers embedded in A.I.-generated images. They look for unusual patterns in how the pixels are arranged, including in their sharpness and contrast. Those signals tend to be generated when A.I. programs create images.

But the detectors ignore all context clues, so they don’t process the existence of a lifelike automaton in a photo with Mr. Musk as unlikely. That is one shortcoming of relying on the technology to detect fakes.

Several companies, including Sensity, Hive and Inholo, the company behind Illuminarty, did not dispute the results and said their systems were always improving to keep up with the latest advancements in A.I.-image generation. Hive added that its misclassifications may result when it analyzes lower-quality images. Umm-maybe and Optic, the company behind A.I. or Not, did not respond to requests for comment.

To conduct the tests, The Times gathered A.I. images from artists and researchers familiar with variations of generative tools such as Midjourney, Stable Diffusion and DALL-E, which can create realistic portraits of people and animals and lifelike portrayals of nature, real estate, food and more. The real images used came from The Times’s photo archive.

Here are seven examples:

A selection of test results

This A.I.-generated artwork of a smiling nun was created by Victoriano Izquierdo, a data scientist and artist who works with A.I.

GENERATED BY A.I.

Test results from the image of Smiling Nun: 1 out of 5 fooled.

This A.I.-generated artwork of an explosion near a government building circulated widely online. Despite some visible signs that it is not real, most detectors could not spot any anomalies.

GENERATED BY A.I.

Test results from the image of Explosion: 4 out of 5 fooled.

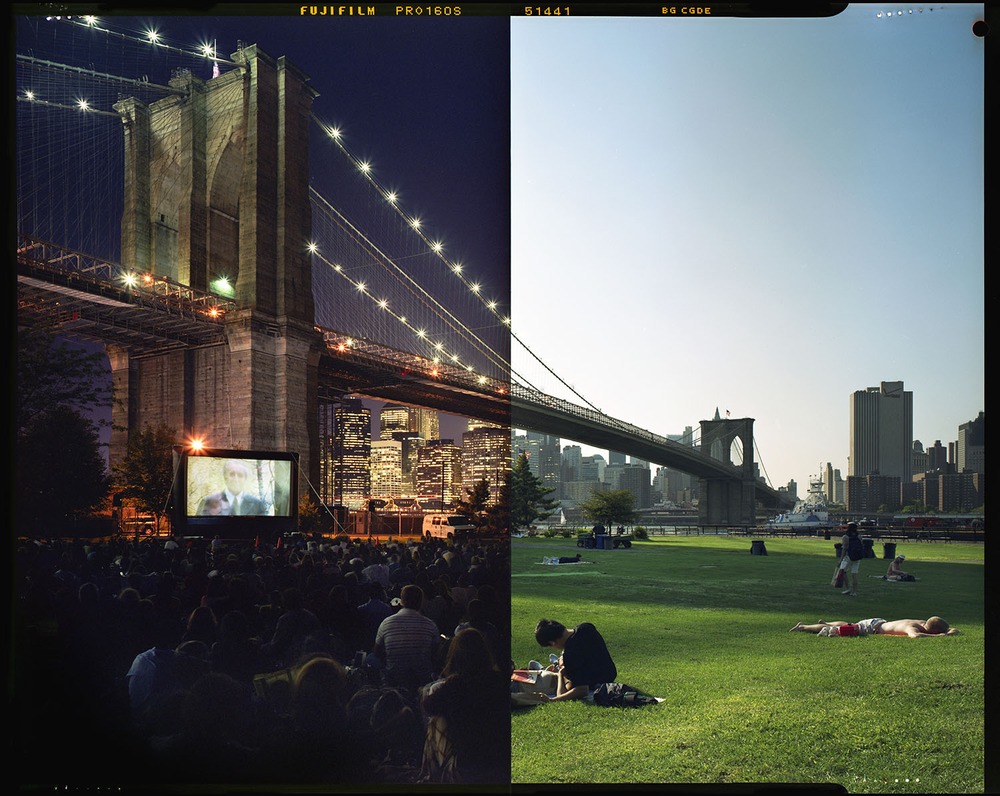

This real photograph, by Damon Winter, a photographer for The New York Times, was taken using two exposures — one in the day, with one half of the film covered, and one at night, with the cover removed. Most image detectors could still determine it was real.

REAL IMAGE

Test results from the image of Brooklyn Bridge: 1 out of 5 fooled.

This A.I.-generated artwork was created by Holly Alvarez, an artist who works with A.I. and is known as the Pumpkin Empress, in a series of images depicting satanic rituals inside libraries. The image was found circulating on far-right social media, where users claimed it depicted a genuine event.

GENERATED BY A.I.

Test results from the image of Satanic Children: 1 out of 5 fooled.

This A.I.-generated artwork of waves crashing onto a beach at sunset was created by Absolutely AI, a creative-content agency. It won a photography contest in February.

GENERATED BY A.I.

Test results from the image of Beach at Sunset: 3 out of 5 fooled.

This A.I.-generated artwork of a man who bears a resemblance to the actor Daniel Radcliffe was created by Julian van Dieken, an artist who works with A.I.

GENERATED BY A.I.

Test results from the image of Daniel Radcliffe Lookalike: 1 out of 5 fooled.

The long exposure on this photograph by Ashley Gilbertson gives the flowing water a supernatural appearance. Most A.I. detectors were not fooled.

REAL IMAGE

Test results from the image of Waterfall: 1 out of 5 fooled.

Detection technology has been heralded as one way to mitigate the harm from A.I. images.

A.I. experts like Chenhao Tan, an assistant professor of computer science at the University of Chicago and the director of its Chicago Human+AI research lab, are less convinced.

“In general I don’t think they’re great, and I’m not optimistic that they will be,” he said. “In the short term, it is possible that they will be able to perform with some accuracy, but in the long run, anything special a human does with images, A.I. will be able to re-create as well, and it will be very difficult to distinguish the difference.”

Most of the concern has centered on lifelike portraits. Gov. Ron DeSantis of Florida, who is also a Republican candidate for president, was criticized after his campaign used A.I.-generated images in a post. Synthetically generated artwork that focuses on scenery has also caused confusion in political races.

Many of the companies behind A.I. detectors acknowledged that their tools were imperfect and warned of a technological arms race: The detectors must often play catch-up to A.I. systems that seem to be improving by the minute.

“Every time somebody builds a better generator, people build better discriminators, and then people use the better discriminator to build a better generator,” said Cynthia Rudin, a computer science and engineering professor at Duke University, where she is also the principal investigator at the Interpretable Machine Learning Lab. “The generators are designed to be able to fool a detector.”

Sometimes, the detectors fail even when an image is obviously fake.

Dan Lytle, an artist who works with A.I. and runs a TikTok account called The_AI_Experiment, asked Midjourney to create a vintage picture of a giant Neanderthal standing among normal men. It produced this aged portrait of a towering, Yeti-like beast next to a quaint couple.

GENERATED BY A.I.

Test results from the image of Giant: 5 out of 5 fooled.

The wrong result from each service tested demonstrates one drawback with the current A.I. detectors: They tend to struggle with images that have been altered from their original output or are of low quality, according to Kevin Guo, a founder and the chief executive of Hive, an image-detection tool.

When A.I. generators like Midjourney create photorealistic artwork, they pack the image with millions of pixels, each containing clues about its origins. “But if you distort it, if you resize it, lower the resolution, all that stuff, by definition you’re altering those pixels and that additional digital signal is going away,” Mr. Guo said.

When Hive, for example, ran a higher-resolution version of the Yeti artwork, it correctly determined the image was A.I.-generated.
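Mr. Guo's point about resizing can be illustrated with a toy sketch. It is not how Hive or any other detector works: the 8-by-8 grid, the faint checkerboard standing in for a pixel-level signal, and the function name downscale_2x are all assumptions made for illustration. Halving the resolution by averaging neighboring pixels erases the fine pattern completely, leaving the same result a clean image would produce.

```python
def downscale_2x(img):
    """Halve width and height by averaging each 2x2 block of pixels."""
    h, w = len(img), len(img[0])
    return [[(img[y][x] + img[y][x + 1] + img[y + 1][x] + img[y + 1][x + 1]) / 4
             for x in range(0, w - 1, 2)]
            for y in range(0, h - 1, 2)]

# Hypothetical "clean" image: a smooth left-to-right gradient.
clean = [[16 * x for x in range(8)] for y in range(8)]

# Same image with a faint checkerboard (+2 / -2) added, standing in for
# a fine pixel-level pattern a detector might look for.
marked = [[16 * x + (2 if (x + y) % 2 == 0 else -2) for x in range(8)]
          for y in range(8)]

# At full resolution the two images differ; after resizing they do not.
differs_at_full_size = marked != clean
differs_after_resize = downscale_2x(marked) != downscale_2x(clean)
print(differs_at_full_size, differs_after_resize)
```

In this sketch the pattern cancels out exactly because each 2-by-2 block contains two raised and two lowered pixels. Real images and real resizing filters are messier, but the direction of the effect is the same: fine detail is the first thing lost.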

Such shortfalls can undermine the potential for A.I. detectors to become a weapon against fake content. As images go viral online, they are often copied, resaved, shrunken or cropped, obscuring the important signals that A.I. detectors rely on. A new tool from Adobe Photoshop, known as generative fill, uses A.I. to expand a photo beyond its borders. (In a test, a photograph that had been expanded using generative fill confused most detection services.)

The unusual portrait below, which shows President Biden, has much better resolution. It was taken in Gettysburg, Pa., by Damon Winter, the photographer for The Times.

Many of the detectors correctly thought the portrait was genuine, but not all did.

REAL IMAGE

Test results from the image of Biden Portrait: 1 out of 5 fooled.

Falsely labeling a genuine image as A.I.-generated is a significant risk with A.I. detectors. Sensity was able to correctly label most A.I. images as artificial. But the same tool incorrectly labeled many real photographs as A.I.-generated.

Those risks could extend to artists, who could be inaccurately accused of using A.I. tools in creating their artwork.

This Jackson Pollock painting, called “Convergence,” features the artist’s familiar, colorful paint splatters. Most, but not all, of the A.I. detectors determined this was a real image and not an A.I.-generated replica.

REAL IMAGE

Test results from a painting by Pollock: 1 out of 5 fooled.

Illuminarty’s creators said they wanted a detector capable of identifying fake artwork, like paintings and drawings.

In the tests, Illuminarty correctly assessed most real photos as authentic, but labeled only about half the A.I. images as artificial. The tool, its creators said, has an intentionally cautious design to avoid falsely accusing artists of using A.I.
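The trade-off behind a cautious design can be sketched with a simple cutoff. The scores below are invented for illustration and are not results from The Times's tests, and the function error_rates is a hypothetical helper, not part of any detector. The sketch assumes a detector that outputs a likelihood score for each image and calls it A.I.-generated when the score reaches a threshold.

```python
def error_rates(scores_real, scores_ai, threshold):
    """Return (share of real images flagged as A.I., share of A.I. images missed)."""
    false_alarms = sum(s >= threshold for s in scores_real) / len(scores_real)
    missed = sum(s < threshold for s in scores_ai) / len(scores_ai)
    return false_alarms, missed

# Invented likelihood scores (0 = surely real, 1 = surely A.I.).
real_scores = [0.05, 0.10, 0.30, 0.55, 0.70]
ai_scores = [0.40, 0.60, 0.80, 0.95, 0.99]

balanced = error_rates(real_scores, ai_scores, threshold=0.5)
cautious = error_rates(real_scores, ai_scores, threshold=0.9)
print("balanced:", balanced)
print("cautious:", cautious)
```

With these made-up numbers, raising the cutoff from 0.5 to 0.9 eliminates false accusations against real images but causes the detector to miss more of the A.I. images, the same pattern the cautious design accepts on purpose.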

Illuminarty’s tool, along with most other detectors, correctly identified a similar image in the style of Pollock that was created by The New York Times using Midjourney.

GENERATED BY A.I.

Test results from the image of a splatter painting: 1 out of 5 fooled.

A.I.-detection companies say their services are designed to help promote transparency and accountability, helping to flag misinformation, fraud, nonconsensual pornography, artistic dishonesty and other abuses of the technology. Industry experts warn that financial markets and voters could become vulnerable to A.I. trickery.

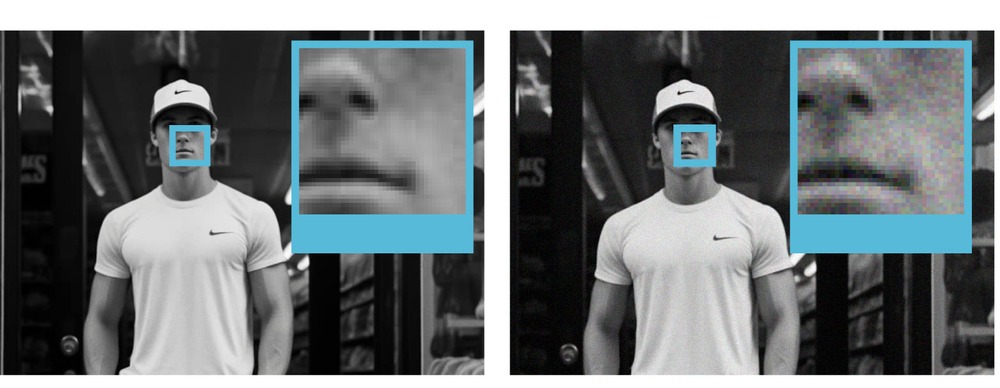

This image, in the style of a black-and-white portrait, is fairly convincing. It was created with Midjourney by Marc Fibbens, a New Zealand-based artist who works with A.I. Most of the A.I. detectors still managed to correctly identify it as fake.

GENERATED BY A.I.

Test results from the image of a man wearing Nike: 1 out of 5 fooled.

Yet the A.I. detectors struggled after just a bit of grain was introduced. Detectors like Hive suddenly believed the fake images were real photos.

The subtle texture, which was nearly invisible to the naked eye, interfered with its ability to analyze the pixels for signs of A.I.-generated content. Some companies are now trying to identify the use of A.I. in images by evaluating perspective or the size of subjects’ limbs, in addition to scrutinizing pixels.

No grain: 99% likely to be A.I.-generated

Grain added: 3.3% likely to be A.I.-generated
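What adding grain does to an image's pixels can be shown in a toy sketch. It assumes grain can be approximated as small random noise added to each pixel, which is a simplification and not a description of how The Times altered its test image or how any detector reads it. The names add_grain and local_variation are hypothetical.

```python
import random

def add_grain(img, strength=3.0, seed=0):
    """Add zero-mean Gaussian noise to each pixel, clamped to the 0..255 range."""
    rng = random.Random(seed)
    return [[min(255, max(0, round(p + rng.gauss(0, strength)))) for p in row]
            for row in img]

def local_variation(img):
    """Mean absolute difference between horizontally adjacent pixels."""
    diffs = [abs(row[x + 1] - row[x]) for row in img for x in range(len(row) - 1)]
    return sum(diffs) / len(diffs)

# Hypothetical 8x8 patch of perfectly uniform gray.
flat = [[128] * 8 for _ in range(8)]
grainy = add_grain(flat)

print(local_variation(flat))    # 0.0: every neighbor is identical
print(local_variation(grainy))  # above zero: neighbors now differ slightly
```

Each pixel moves by only a few brightness levels out of 255, a change that is hard to see, yet the pixel-to-pixel statistics of the patch are no longer the same.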

Artificial intelligence is capable of generating more than realistic images: The technology is already creating text, audio and videos that have fooled professors, scammed consumers and been used in attempts to turn the tide of war.

A.I.-detection tools should not be the only defense, researchers said. Image creators should embed watermarks into their work, said S. Shyam Sundar, the director of the Center for Socially Responsible Artificial Intelligence at Pennsylvania State University. Websites could incorporate detection tools into their backends, he said, so that they can automatically identify A.I. images and serve them more carefully to users with warnings and limitations on how they are shared.

Images are especially powerful, Mr. Sundar said, because they “have that tendency to cause a visceral response. People are much more likely to believe their eyes.”

https://www.nytimes.com/interactive/2023/06/28/technology/ai-detection-midjourney-stable-diffusion-dalle.html?